Data model relationships are the core of Power BI. Report preparation and visualization become much easier when the data modeling is done well. In this article, we will discuss data model relationships with a simple example.

A common misconception among Power BI users is that Power BI is all about visualization. That is not the case. Visualization is only the icing on the cake, whereas data modeling is the cake itself.

You should spend about 75% of your time on data transformation and modeling; the remaining 25% is enough for data visualization. A good data model lets you dig into the data for insights and understand the relationships between the variables.

You can find a detailed discussion of data modeling in Power BI here. The document is prepared by Microsoft itself, so there is no point in repeating the same thing. My aim here is to present a small practical example of data modeling.

Use of multiple narrow tables

I encourage you to use multiple narrow tables instead of one big flat table with many columns. Here lies the beauty of data modeling and relationships. Power BI can work with a big wide table, but it becomes a constraint when scaling the model.

With time your project is going to grow. The tables will gain rows, and chances are that the number of tables and the complexity of your model will increase as well. Fetching the required information from a big table with lots of columns is time-consuming, and it occupies a good amount of computer memory too.

In this scenario, using multiple tables with few columns can make your life easier.
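
To make the idea concrete, here is a minimal Python sketch (not Power BI itself) of what splitting a wide table into two narrow ones looks like. The sample rows and the names `flat_rows`, `district_ids` and `fact_rows` are my own assumptions for illustration only.

```python
# Hypothetical sample rows: (state, district, crop, production in tonnes).
flat_rows = [
    ("Assam", "Barpeta", "Rice", 1200.0),
    ("Assam", "Barpeta", "Jute", 300.0),
    ("Assam", "Cachar", "Rice", 900.0),
    ("Kerala", "Idukki", "Rice", 150.0),
]

# Narrow lookup table: each (state, district) pair is stored once with an ID.
district_ids = {}
for state, district, _crop, _production in flat_rows:
    district_ids.setdefault((state, district), len(district_ids))

# Narrow fact table: the repeated text is replaced by the small integer ID.
fact_rows = [
    (district_ids[(state, district)], crop, production)
    for state, district, crop, production in flat_rows
]

print(district_ids)
print(fact_rows)
```

The repeated state and district text now lives in one small table, and the larger table only carries an integer key.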

Data model relationships in Power BI: from the Business Intelligence point of view

Business Intelligence, or BI, is of critical importance in industry. Almost all businesses have a well-developed database to store all kinds of transactions. Over time such databases get huge and extracting information becomes complex.

Extracting information from such big data requires experts in the field. Businesses appoint software developers to extract the data, transform it and load it into a well-maintained data warehouse.

Creating a data warehouse is essential because no business wants to disturb its always-busy database; doing so may jeopardize the real-time transaction process. That’s why software developers keep the information they need in a data warehouse of their own.

Now, for a person without a software development background, it is tough to handle a data warehouse. Here comes the power of Power BI. It makes the whole ETL (Extract, Transform and Load) process a cakewalk, even for someone with no knowledge of software development.

A practical example of data model relationships in Power BI

Here is a simple example of how you can model relationships in Power BI. The data I have used is crop production data for different states of India. It is accessible at Data.world. This is real-world data without any garbage.

Data.world has already provided the data in a refined form, so very little is left for us to do in terms of data cleaning, and we can jump straight into the modeling process.

Below is a glimpse of the data file imported into Power BI. This is a screenshot of the data transformation window of Power BI.

The columns are:

- “State_Name” contains the different states of India.

- “District_Name” contains the districts of each state.

- “Crop_Year” has the year of production.

- “Season” has the season of crop production.

- “Crop” is the crop name.

- “Area_ha” contains the total area under the crop in hectares.

- “Production_tonnes” has the total production of the season in tonnes.
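
If it helps to think of one row of this table as a typed record, here is a rough Python sketch. The class name `CropRecord`, the field types and the sample values are my own assumptions; the actual column types depend on how Power BI detects them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CropRecord:
    state_name: str
    district_name: str
    crop_year: int
    season: str
    crop: str
    area_ha: float
    production_tonnes: Optional[float]  # assumed nullable: blanks may occur


# Made-up sample values, purely for illustration.
sample = CropRecord("Assam", "Barpeta", 2010, "Kharif", "Rice", 1000.0, 1500.0)

# A simple derived measure: yield in tonnes per hectare.
yield_t_per_ha = sample.production_tonnes / sample.area_ha
print(yield_t_per_ha)
```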

Now, this is not a very big table. Still, we can reduce its size and create a custom key to further improve the data model.

Creating a custom key

To create a custom key, we first need to select a column from which to build a separate table. Let’s select “District_Name” to create the new table.

Now, why should I select this column? The answer is easy: this column has repeated values. In the same way, “State_Name” does not have unique values either, so either of them could be selected.

We will create a separate table from this column in which the values are unique, giving far fewer rows. Tables like this help us with data munging, data wrangling or whatever fancy term you want to use 🙂

Trimming the column

The first step is to trim the column to remove any unwanted white space. Just select the column in question, right-click and select “Transform” to get the trimming option. See the image below.
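
Why does trimming matter for a key column? Here is a small Python sketch of the same idea, with made-up district names. Values that differ only by stray spaces count as different keys until they are trimmed.

```python
# Made-up values with stray leading and trailing spaces.
districts = ["  Barpeta", "Cachar ", "Idukki", " Barpeta "]

# str.strip() removes leading and trailing white space from each value.
trimmed = [name.strip() for name in districts]

print(trimmed)
# Number of distinct values before and after trimming.
print(len(set(districts)), len(set(trimmed)))
```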

Removing the blank values

The second important step is to replace any blank values. Blank values are a real problem in data analytics. They can lead to spurious results, as tools are generally not capable of handling them. So we should handle them carefully at the data transformation stage itself.

We can easily inspect the column quality and distribution from the “View” tab, as shown in the image below.

So we can conclude that this particular variable is already free from blank values: the column quality option shows “Empty” as 0%.

But if your data has blank values, this is a convenient way to check for them. For example, if we check the crop production variable (“Production_tonnes”), we can see that “Empty” is <1%. That means it has some blank rows.
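
The “Empty” figure is essentially the share of blank entries in a column. A minimal Python sketch of that calculation is given here; the function name `percent_empty` and the sample values are my own, and Power BI’s exact rounding and profiling rules may differ.

```python
def percent_empty(values):
    """Return the share of None or empty-string entries as a percentage."""
    if not values:
        return 0.0
    empty = sum(1 for v in values if v is None or v == "")
    return 100.0 * empty / len(values)


# Made-up production values with one blank entry out of four.
production = [1500.0, None, 300.0, 900.0]
print(percent_empty(production))
```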

To see the blank values, click the filter icon next to the column name. Uncheck the “Select All” option and check only the null values, as shown in the figure above. Now “Empty” shows 100%.

To replace the null values, right-click on the column name and select the “Replace Values…” option. In the new window, replace null with 0 and click OK. Now you can check that “Empty” has become 0% and all null values have been replaced by 0.
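
In plain Python terms, the replacement step amounts to something like the sketch below. The sample values are made up, and `None` stands in for Power BI’s null.

```python
# Made-up production values; None plays the role of null.
production = [1500.0, None, 300.0, None]

# Replace every null with 0 and leave all other values untouched.
filled = [0.0 if value is None else value for value in production]

print(filled)
```

Keep in mind that after this step the zeros behave like real measurements, so they will pull down any average computed on the column.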

As no other column shows any Empty values, our data is now completely free of blank values, and we can proceed to the next step.

Creating new query and converting to table

In the next step we will create a new query using the “Add as New Query” option. See the image below for the steps to create the new query and then convert it to a separate table.

Removing duplicate entries

The newly created column has several duplicate entries. To make it a table with only unique entries, we need to remove the duplicates. As shown in the image below, click the “Remove Duplicates” option. Now you have a column with all the different districts of India.
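
For readers who like to see the logic, removing duplicates while keeping the first occurrence of each value can be sketched in Python like this. The district names are sample values of my own.

```python
# Made-up district column with repeated entries.
districts = ["Barpeta", "Cachar", "Barpeta", "Idukki", "Cachar"]

# dict.fromkeys keeps only the first occurrence of each value, in order.
unique_districts = list(dict.fromkeys(districts))

print(unique_districts)
```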

Creating IDs for the column

It is very helpful to create a custom ID for the values in the newly created column. Since the IDs are created by you, you have full control over them. Power BI has a simple option for creating IDs. See the following image to understand the steps.

The “Add Column” tab has options for both a custom column and default column IDs starting from 0 or 1. You can choose any of them. For example, here I have selected column IDs starting from 0.
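
The ID column is nothing more than a running number attached to each unique value. A small Python sketch of the same idea follows; the column name `District_ID` is a hypothetical name I chose, not one taken from the screenshots.

```python
# Unique district names (sample values) that need an ID each.
unique_districts = ["Barpeta", "Cachar", "Idukki"]

# enumerate(..., start=0) mirrors choosing IDs that start from 0;
# use start=1 for IDs that start from 1.
district_table = [
    {"District_ID": index, "District_Name": name}
    for index, name in enumerate(unique_districts, start=0)
]

for row in district_table:
    print(row)
```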

Merging the tables

The next step is to merge queries. Now that we have created the custom keys, we need to merge the designated tables. See the following images to complete the task. Go to the “Home” tab and select the “Merge Queries” option.

A “Merge” window will now open, and you need to verify that all the rows match between the two tables. Here you can see that we have 246091 matching rows.

Now the newly merged column will appear in the table. You can see in the image below that the whole table is displayed as a column. So you need to select the particular column you want and deselect the option “Use original column name as prefix”.
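
Conceptually, the merge looks up each row’s district in the small district table and pulls its ID into the main table. Here is a minimal Python sketch, assuming a left-outer style lookup on “District_Name”; the sample rows and the `District_ID` name are assumptions of mine.

```python
# Lookup table built in the earlier steps (sample values): name -> ID.
district_table = {"Barpeta": 0, "Cachar": 1, "Idukki": 2}

# A few made-up rows from the main crop table.
crop_rows = [
    {"District_Name": "Barpeta", "Crop": "Rice"},
    {"District_Name": "Idukki", "Crop": "Rice"},
    {"District_Name": "Barpeta", "Crop": "Jute"},
]

# Keep every crop row; unmatched districts would get None as their ID.
merged = [
    {**row, "District_ID": district_table.get(row["District_Name"])}
    for row in crop_rows
]

# Same kind of check as the match count shown in the "Merge" window.
matched = sum(1 for row in merged if row["District_ID"] is not None)
print(f"{matched} of {len(crop_rows)} rows matched")
```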

Data model relationships in Power BI: task completed

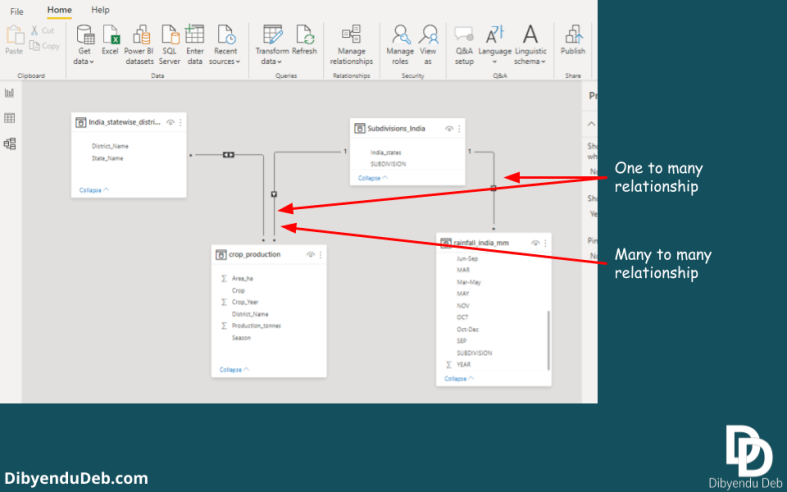

With the last step, we have completed creating a data model relationship between the two tables. You can verify this in the “Model” view of Power BI, which displays a “One to Many” relationship between the tables.
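
What makes a relationship “One to Many”? The key must be unique in one table and may repeat in the other. Here is a rough Python sketch of that check. The function name `is_one_to_many` is my own, and the second condition (every key on the “many” side exists on the “one” side) is an extra sanity check I added rather than something the steps above require.

```python
from collections import Counter


def is_one_to_many(one_side_keys, many_side_keys):
    """Check key uniqueness on the 'one' side and key coverage of the 'many' side."""
    one_counts = Counter(one_side_keys)
    unique_on_one_side = all(count == 1 for count in one_counts.values())
    all_resolved = set(many_side_keys) <= set(one_counts)
    return unique_on_one_side and all_resolved


# District IDs are unique in the district table and repeat in the crop table.
print(is_one_to_many([0, 1, 2], [0, 0, 2, 1]))
# A duplicated ID on the 'one' side breaks the relationship.
print(is_one_to_many([0, 1, 1], [0, 1]))
```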

Final words

So, here is a simple example of data model relationships in Power BI with practical data. It also shows how you can create custom keys to join or merge different tables. This is an effective way of creating a relationship, as you have full control over the keys.

I hope this article helps you get started with data model relationships in Power BI with ease. In case of any doubt, you can comment below. Also, do suggest any interesting data modeling topic you have in mind.

With this article, I am planning to start a series of articles on Power BI to solve real-world problems. So, keep visiting the website for new and interesting articles.